The Enterprise AI Risk Index: Q4 Briefing

Why Q4 Exposes What Q1 Decisions Created—And What Executives Must Do Now

Every enterprise is running an AI experiment. The question is whether you know what's actually at stake.

As 2025 draws to a close, enterprise AI has reached an inflection point. Workforce adoption has surged from 22% to 75% in just two years. The enterprise AI governance market has expanded from $400 million to $2.2 billion—a 450% increase—and is projected to reach $4.9 billion by 2030. But here's what should concern every executive reading this: 74% of organizations still struggle to translate AI investments into meaningful business outcomes, and 47% of companies using generative AI have already experienced significant problems ranging from hallucinated outputs to data exposure and IP leakage.

This isn't a technology problem. It's a governance and accountability problem expressed through technology.

The Enterprise AI Risk Index provides a quarterly, decision-oriented view of where AI risk concentrates as systems move from experimentation to operational dependency. This briefing is designed for CIOs, COOs, General Counsel, and board subcommittees who need to understand not just what's happening, but where executive intervention is required.

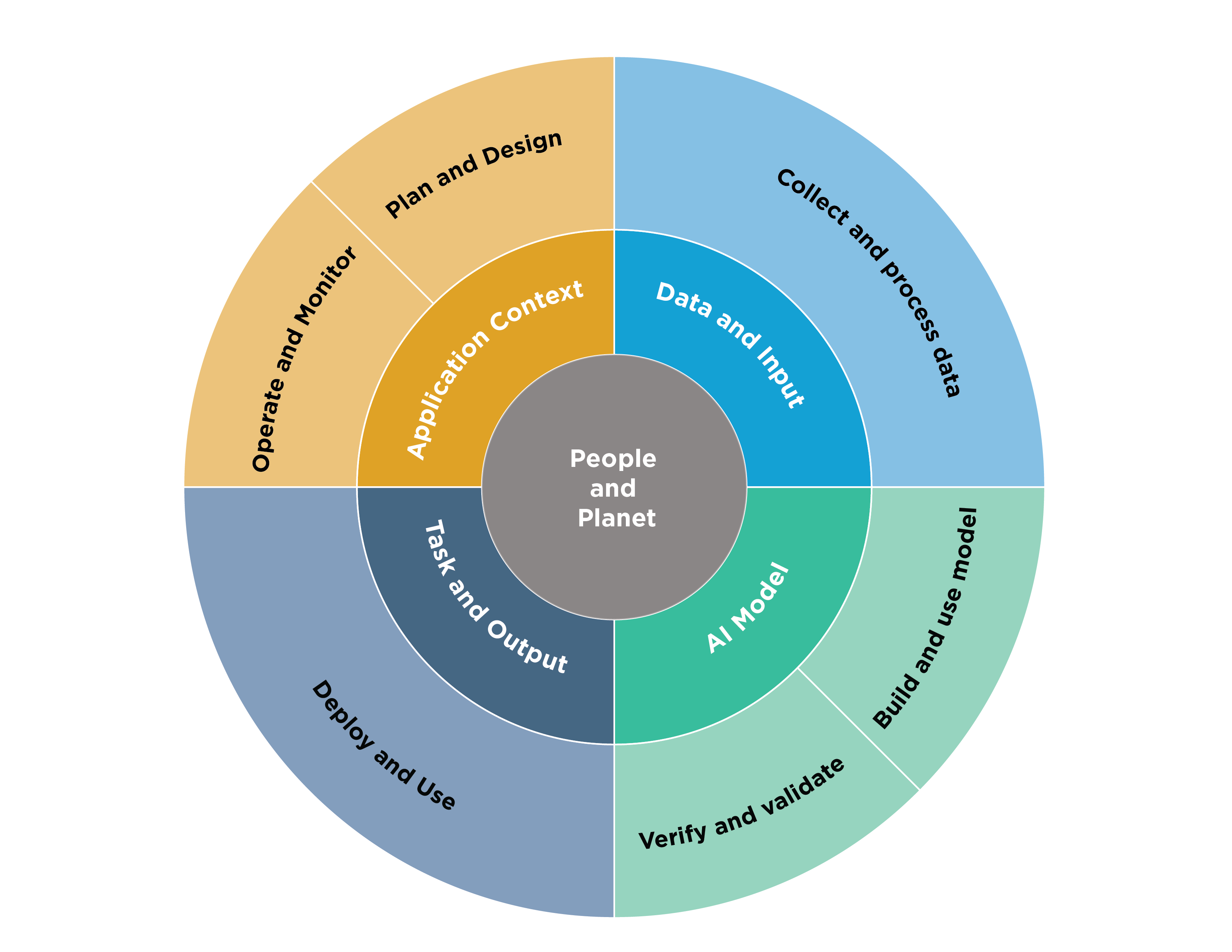

The Annual AI Risk Lifecycle

Enterprise AI risk doesn't emerge randomly. It accumulates in predictable phases aligned to budgeting, scaling, and optimization cycles. Organizations that manage AI risk effectively intervene before Q4, not during it.

Across organizations, four risk patterns consistently surface. In Q1, commitments are made before constraints are defined. In Q2, integration outpaces visibility. By Q3, dependence increases faster than resilience. And in Q4, exposure becomes unavoidable.

| Quarter | Primary Risk Theme | Executive Concern | Risk Level |
|---|---|---|---|
| Q1 | Expansion without constraint | Irreversible decisions | Moderate |
| Q2 | Complexity without visibility | Hidden systemic exposure | High |
| Q3 | Reliance without resilience | Business continuity risk | High |
| Q4 | Exposure and accountability | Regulatory, reputational, operational impact | Critical |

Understanding this lifecycle isn't academic—it's operational. The decisions made in January become the exposures audited in December. The integrations rushed in April create the blind spots discovered in October. The dependence normalized in August produces the failures examined in November.

Q1: Planning & Expansion Phase

The Risk Profile

The highest risk domains in Q1 center on strategic alignment, architecture and dependency decisions, and governance bypass. Early AI decisions are made for speed and signaling, not durability. Vendor selection, architectural patterns, and governance exceptions become embedded before oversight matures.

This is when irreversible decisions get locked in. A vendor contract signed in February. An architectural pattern chosen in March because it was fastest, not because it was right. A governance exception granted "just this once" that becomes permanent practice. The trajectory is clear: Gartner projects that by 2027, over 40% of AI-related data breaches will stem from unapproved or improper generative AI use, and those seeds are planted in Q1.

Executive Actions Required

Three interventions are critical during this phase. First, require explicit business ownership for every AI initiative—no orphaned experiments. Second, enforce architecture abstraction requirements that prevent lock-in to any single vendor or model. Third, block production deployment of any AI system that hasn't received governance sign-off. These aren't bureaucratic hurdles; they're the insurance policies that prevent Q4 crises.
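As a rough illustration, the three interventions can be written as a pre-deployment gate. This is a minimal sketch, not a reference to any real governance tool: the `AIInitiative` record, its field names, and the `deployment_blockers` helper are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIInitiative:
    """Hypothetical record describing one AI initiative."""
    name: str
    business_owner: Optional[str] = None  # named accountable owner, if any
    vendor_abstraction: bool = False      # True if the model/vendor sits behind an abstraction layer
    governance_signoff: bool = False      # True once governance review has approved the system


def deployment_blockers(initiative: AIInitiative) -> list[str]:
    """Return the reasons an initiative may not go to production (empty list means clear)."""
    blockers = []
    if not initiative.business_owner:
        blockers.append("no business owner")
    if not initiative.vendor_abstraction:
        blockers.append("no vendor abstraction layer")
    if not initiative.governance_signoff:
        blockers.append("no governance sign-off")
    return blockers
```

An initiative with an empty blocker list is eligible for production; anything else goes back to its sponsor with the specific gaps named.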

Q2: Integration & Scale Phase

The Risk Profile

The highest risk domains shift to integration complexity, data flow and lineage, and security and access control. AI systems become deeply embedded into core workflows without full understanding of data movement, access boundaries, or failure paths. This is hidden systemic exposure—the risk you don't see until something breaks.

The numbers are stark: corporate data pasted or uploaded into AI tools rose by 485% between 2023 and 2024. Employees increasingly rely on personal cloud applications for work, with 88% using them monthly and 26% uploading or sending corporate data through them. These create pathways where sensitive information reaches AI systems outside enterprise oversight—an expanding parallel ecosystem of ungoverned data flows that traditional IT cannot see.

Executive Actions Required

Q2 demands visibility investments. Demand comprehensive AI system dependency mapping—know what connects to what, and what breaks when something fails. Require data lineage documentation for every AI-integrated workflow. Enforce least-privilege access controls across all AI systems. If you can't map it, you can't manage it. If you can't manage it, you can't defend it in Q4.
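Dependency mapping comes down to one question: if this component fails, what else fails with it? The sketch below answers it with a breadth-first walk over a dependency map. The system names and the `impacted_by` helper are hypothetical, and a real inventory would come from a CMDB or service catalog rather than a hand-written dictionary.

```python
from collections import deque


def impacted_by(failure: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every system that directly or transitively depends on `failure`."""
    # Invert the map so we can ask "who depends on this component?"
    dependents: dict[str, list[str]] = {}
    for system, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(system)

    impacted: set[str] = set()
    queue = deque([failure])
    while queue:
        current = queue.popleft()
        for system in dependents.get(current, []):
            if system not in impacted:
                impacted.add(system)
                queue.append(system)
    return impacted


# Hypothetical dependency map: each system lists what it depends on.
DEPENDS_ON = {
    "support_chatbot": ["llm_vendor_api", "crm"],
    "ticket_triage": ["support_chatbot"],
    "sales_forecast": ["crm"],
}

print(sorted(impacted_by("llm_vendor_api", DEPENDS_ON)))
```

In this made-up map, an outage at the model vendor reaches ticket triage through the chatbot even though triage never calls the vendor directly. That indirect path is the kind of hidden exposure the mapping exercise is meant to surface.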

Q3: Optimization & Dependency Phase

The Risk Profile

The highest risk domains now concentrate around operational dependency, human oversight erosion, and model drift. Operational reliance on AI outpaces preparedness for failure, drift, or external scrutiny. Human judgment degrades as performance normalizes. This is the business continuity risk phase.

Here's the uncomfortable truth: 80% of organizations now have a dedicated AI risk function, according to IBM's Institute for Business Value. But having a function doesn't mean it's effective. Nearly 31% of boards still don't treat AI as a standing agenda item, and 66% report little to no experience with AI topics. The gap between operational dependence and governance capability is widest in Q3.

Executive Actions Required

Three actions become essential. Require documented fallback and degraded-mode plans for every AI system supporting critical operations. Mandate periodic AI output audits—not just performance metrics, but accuracy and drift assessments. Reinforce human escalation thresholds that were probably loosened during Q2's integration push. The goal isn't to slow things down; it's to ensure you can recover when things go wrong.
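A periodic output audit can be as simple as comparing a sample of AI outputs against human-reviewed answers and escalating when accuracy slips. The following is a minimal sketch under stated assumptions: the function names are hypothetical, exact-match comparison stands in for whatever review rubric the organization uses, and the 5-point tolerance is an arbitrary placeholder rather than a recommended threshold.

```python
def audit_accuracy(samples: list[tuple[str, str]]) -> float:
    """Fraction of audited outputs that match the human-reviewed answer.

    Each sample is a (model_output, reviewed_answer) pair.
    """
    if not samples:
        raise ValueError("audit needs at least one reviewed sample")
    correct = sum(1 for model_output, reviewed in samples if model_output == reviewed)
    return correct / len(samples)


def needs_escalation(baseline: float, current: float, tolerance: float = 0.05) -> bool:
    """True when accuracy has dropped by more than `tolerance` since the baseline audit."""
    # The tolerance is an assumed placeholder; set it per system and risk appetite.
    return (baseline - current) > tolerance
```

Comparing each audit to a recorded baseline, rather than to the previous audit alone, keeps slow drift from hiding behind a series of small quarter-over-quarter changes.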

Q4: Exposure & Accountability Phase

The Risk Profile

Q4 brings the highest risk concentration across trust, governance, data exposure, and operational failure. AI systems face audit, regulatory, and reputational scrutiny. Informal controls are no longer defensible. This is where regulatory, reputational, and operational risks converge.

The regulatory environment has never been more demanding. The EU AI Act imposes fines of up to €15 million or 3% of global annual turnover for non-compliance with high-risk AI obligations, rising to €35 million or 7% for prohibited practices. The NIST AI Risk Management Framework, ISO/IEC 42001, and emerging state-level requirements create a multi-jurisdictional compliance landscape that informal practices cannot navigate. And regulators aren't the only stakeholders watching: customers, partners, and boards are increasingly demanding visibility into AI decision-making processes.

Executive Actions Required

Q4 is not the time for expansion; it's the time for consolidation and accountability. Freeze AI scope expansion until current systems are fully documented and auditable. Conduct comprehensive audits of all active AI systems and vendor relationships. Establish unambiguous accountability chains and tested incident response procedures. The organizations that survive Q4 scrutiny are those that treated governance as an operating condition throughout the year, not those scrambling to document what they should have controlled all along.

Understanding the Risk Scoring Framework

The Enterprise AI Risk Index uses a four-level scoring system designed for executive decision-making, not technical analysis.

| Score | Risk Level | Interpretation |
|---|---|---|
| 1 | Low | Controlled, documented, and auditable |
| 2 | Moderate | Inconsistent controls across the organization |
| 3 | High | Informal or reactive management only |
| 4 | Critical | No clear ownership or safeguards in place |

Three interpretation principles matter for executives. First, any score of 3 or higher requires direct executive attention—these aren't issues for working groups to address. Second, trends matter more than single-quarter values; a domain moving from 2 to 3 demands more attention than one stable at 3. Third, Q4 scores reflect accumulated exposure from earlier quarters, not sudden failures—which means they also reflect the quality of earlier interventions.
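The first two principles translate directly into a small check on each domain's score history. This is an illustrative sketch only: the `assess` helper and its output keys are hypothetical, and it deliberately reports the two signals separately rather than inventing a combined ranking the Index does not define.

```python
def assess(history: list[int]) -> dict[str, bool]:
    """Assess one risk domain from its quarterly scores (1-4), oldest first."""
    if not history:
        raise ValueError("need at least one quarterly score")
    if any(score not in (1, 2, 3, 4) for score in history):
        raise ValueError("scores must be integers from 1 to 4")

    latest = history[-1]
    # With only one quarter of data there is no trend to report.
    previous = history[-2] if len(history) > 1 else latest
    return {
        "executive_attention": latest >= 3,  # principle 1: score of 3 or higher
        "worsening": latest > previous,      # principle 2: the trend since last quarter
    }
```

A domain that moved from 2 to 3 returns both flags set, while one stable at 3 returns only the first. That difference is what separates a newly deteriorating domain from a known, standing problem.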

The Strategic Imperative

The case for robust AI governance has never been more compelling. But governance gaps extend all the way to the boardroom. The challenge isn't awareness: 58% of executives say strong Responsible AI (RAI) practices improve ROI and operational efficiency, and 55% link RAI to better customer experience and innovation. The challenge is execution: translating principles into repeatable workflows, guardrails, audits, and monitoring frameworks.

The organizations that succeed are not those with the most sophisticated models or the largest AI investments. They're the organizations that intervene early, enforce discipline quarterly, and treat AI risk as an operating condition rather than an exception to be managed when convenient.

Q4 reveals everything. It exposes the shortcuts taken in Q1, the blind spots created in Q2, and the dependencies normalized in Q3. But it also rewards the organizations that got ahead of these risks—that built governance into their AI operations from the start, not as an afterthought when regulators came calling.

The question isn't whether your organization will face AI risk. The question is whether you'll face it prepared—or scrambling.